Powering AI Workloads with Arcfra: A Unified Full-Stack Platform for the AI Era

As enterprises embrace Large Language Models (LLMs) to build AI applications or deploy pre-trained models into production, the demands on IT infrastructure are rapidly increasing. But is traditional infrastructure keeping up? AI workloads require more than just raw computing power — they need efficient GPU allocation, dynamic resource scheduling, seamless integration between VMs and containers, and high-performance storage for diverse data types.

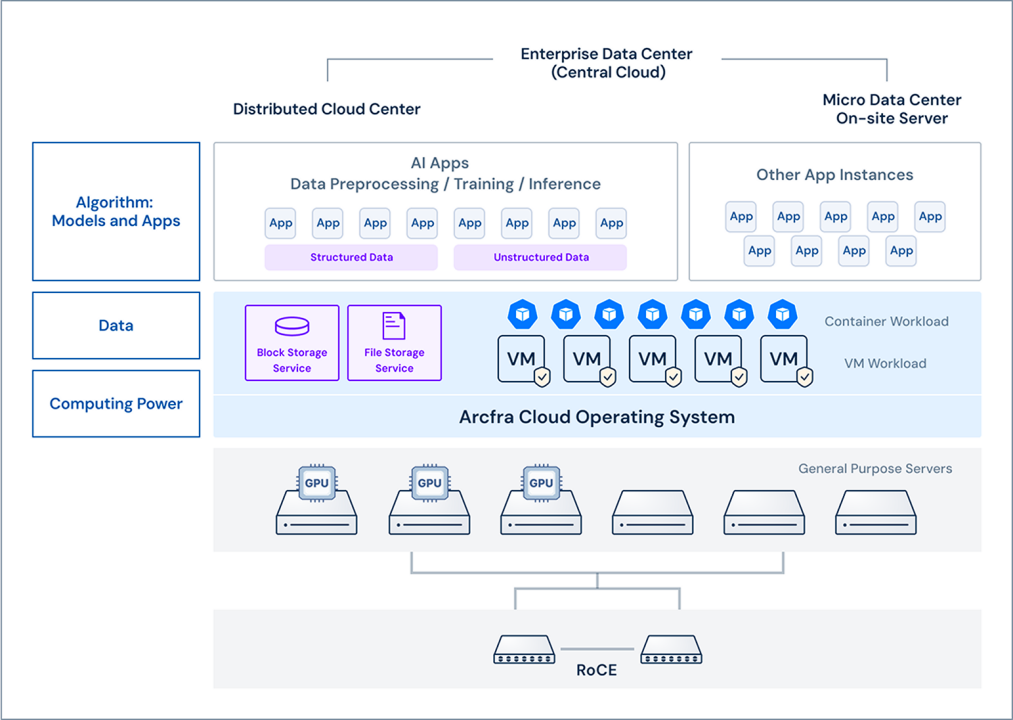

The Arcfra Enterprise Cloud Platform (AECP) provides a full-stack AI infrastructure, unifying compute, workloads, and storage to meet these evolving demands. With flexible CPU and GPU resource management, seamless workload deployment across virtualized and containerized environments, and a low-latency, high-throughput storage system, AECP ensures that enterprises can scale AI applications efficiently — without bottlenecks.

Enterprise-Grade AI Applications: Key IT Infrastructure Demands

Currently, enterprise AI scenarios may not require extensive GPU TFLOPS for training LLMs, but they still place considerable demands on IT infrastructure in terms of performance, resource utilization, support for containerized environments, and storage of diverse data types.

Flexible Scheduling of Compute and Storage Resources

During the development and testing of AI applications, development teams often require varying amounts of GPU resources, with certain tasks not fully utilizing the entire GPU. Likewise, when running AI applications, the demand for GPU and storage resources may fluctuate frequently. Therefore, IT infrastructure should allow flexible slicing and scheduling of compute and storage resources, as well as the integration of high-performance CPU and GPU processors. This approach can enhance resource utilization while accommodating the diverse requirements of different applications and development tasks.

High-Performance and Low-Latency Storage System

Fine-tuning LLMs using industry-specific data requires significant GPU resources. Accordingly, storage systems should offer high-performance, low-latency support for parallel tasks. The workflow of AI applications comprises several stages that involve extensive data reads and writes across multiple data sources. For example, raw data must be pre-processed before it can be used for fine-tuning and inference. Additionally, the text, voice, or video data produced during inference must be stored and exported. Meeting these requirements calls for a storage solution that delivers high-speed performance.

Storage of Various Data Types and Formats

AI applications often need to process structured data (such as databases), semi-structured data (like logs), and unstructured data (such as images and text) simultaneously. This underscores the need for IT infrastructure that supports diverse data storage. Furthermore, storage requirements for AI applications can vary throughout the workflow. For instance, certain stages may necessitate fast storage responsiveness, while others may prioritize shared reading and writing of data.

Unified Support for VM and Container Workloads

Because Kubernetes offers flexible scheduling, an increasing number of AI applications are being deployed on containers and cloud-native platforms. However, many traditional applications are expected to keep running on VMs for the foreseeable future, so there is a rising demand for IT infrastructure capable of supporting and managing both VMs and containers.

Additionally, to enable agile deployment and scaling of AI applications as businesses grow, IT infrastructure should offer flexible scalability, simplified operations and maintenance (O&M), and rapid deployment capabilities.

AECP AI Infrastructure Solution

AECP can provide converged compute and storage resources for AI applications deployed in virtualized and Kubernetes environments:

- Convergence of compute resources (CPU and GPU): Hosts in the AECP cluster can be flexibly configured with different numbers and models of GPU processors, allowing workloads to obtain CPU and GPU resources on demand. Specifically, AECP supports GPU pass-through and vGPU, along with technologies such as MIG and MPS. These features enable users to slice GPU processors flexibly, enhancing GPU utilization and the efficiency of AI applications in virtualized and containerized environments.

- Convergence of virtualized and containerized workloads: Through Arcfra Kubernetes Engine (AKE), AECP unifies support for VM-based and container-based AI applications, addressing diverse performance, security, and agility needs. Features like GPU pass-through, vGPUs, and elastic resource management via Kubernetes ensure efficient resource allocation and scalability.

- Convergence of diverse data storage: AECP features a proprietary distributed storage solution that supports various storage media, addressing the diverse data storage requirements of AI applications. Additionally, it delivers high and stable storage performance, particularly for I/O-intensive use cases.

Convergence of Compute Resources: Flexible Scheduling of CPU and GPU Resources on an Integrated Computing Platform

While LLM-based AI applications require the parallel computing capability of GPUs, the computing resource pools used for generative AI applications should cater to different types of workloads to reduce unnecessary scheduling across CPU and GPU resource pools.

AECP allows servers with different components to be deployed within the same cluster. This means that each host can be configured with different numbers and models of CPU and GPU processors, supporting AI applications and non-AI applications with an integrated computing resource pool.

With GPU pass-through and vGPU support, AECP can deliver GPU capability to HPC-centric applications (such as artificial intelligence, machine learning, image recognition, and VDI) running in the virtualized environment. The vGPU feature lets users slice a GPU and allocate the slices on demand, so multiple VMs can share one GPU, increasing resource utilization while improving AI application performance.

AECP also supports NVIDIA MPS and MIG technologies, allowing users to benefit from the flexible sharing of GPU resources.

In addition, Arcfra has collaborated with other leading enterprises in the AI industry to introduce a joint solution for GPU pooling tailored for AI applications on AECP. This solution can achieve fine-grained slicing and scheduling of parallel computing resources. For example, users can allocate a slice of 0.3 GPU units and combine it with GPU slices on other nodes. Moreover, this solution facilitates the management of heterogeneous chips, helping enterprises improve computing resource utilization and management flexibility.
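To make fractional slicing concrete, here is a minimal sketch of first-fit placement of fractional GPU requests across nodes. It is illustrative only and is not the joint solution's scheduler: the `allocate` function, the workload names, and the GPU identifiers are all hypothetical, and the sketch does not model combining slices from several GPUs for a single workload.

```python
from fractions import Fraction


def allocate(requests, gpus):
    """First-fit placement of fractional GPU requests (hypothetical sketch).

    requests: dict mapping workload name -> requested share as a string, e.g. "0.3"
    gpus: list of GPU identifiers; each starts with one full unit of capacity
    Returns (placement, free): workload -> GPU id, and GPU id -> remaining share.
    """
    # Fractions avoid floating-point drift when shares like 0.3 are summed.
    free = {gpu: Fraction(1) for gpu in gpus}
    placement = {}
    for name, share in requests.items():
        need = Fraction(share)
        for gpu in gpus:
            if free[gpu] >= need:
                free[gpu] -= need
                placement[name] = gpu
                break
        else:
            # No GPU in the pool had enough remaining capacity.
            raise RuntimeError(f"no GPU has {need} units free for {name}")
    return placement, free


# Hypothetical workloads and GPUs on two nodes.
requests = {"notebook": "0.3", "inference": "0.5", "fine-tune": "0.3"}
placement, free = allocate(requests, ["node1/gpu0", "node2/gpu0"])
for name, gpu in placement.items():
    print(f"{name} -> {gpu}")
```

In this example the first two workloads share one GPU, and the third is placed on the second node because the first GPU has only 0.2 units left.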

Convergence of Workloads: Supporting Both Virtualized and Containerized Workloads

With AKE, users can use AECP to support both virtualized and containerized AI applications. Given the differing resource consumption models of various AI workloads, a converged deployment ensures efficient resource allocation while meeting the performance, security, scalability, and agility needs of diverse applications. Ultimately, this strategy optimizes resource utilization and reduces the total cost of ownership (TCO).

Currently, AKE supports features such as GPU pass-through, virtual GPUs (vGPUs), time-slicing, Multi-Instance GPU (MIG), and Multi-Process Service (MPS). Additionally, it supports elastic management of GPU resources through Kubernetes, meeting the parallel computing requirements of AI applications in container environments while optimizing GPU resource utilization and management flexibility.
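As an illustration of how a containerized workload typically asks Kubernetes for a GPU, the sketch below builds a Pod manifest as a Python dictionary. It assumes the cluster exposes GPUs through the NVIDIA device plugin's `nvidia.com/gpu` extended resource; whether AKE uses that exact resource name is an assumption, and the helper `gpu_pod_manifest`, the Pod name, and the image are hypothetical.

```python
import json


def gpu_pod_manifest(name, image, gpu_resource="nvidia.com/gpu", count=1):
    """Build a Kubernetes Pod manifest (as a dict) that requests GPU devices.

    gpu_resource is the extended resource name advertised by the GPU device
    plugin; "nvidia.com/gpu" is assumed here.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # Extended resources such as GPUs are requested under limits
                    # and must be whole numbers.
                    "resources": {"limits": {gpu_resource: count}},
                }
            ],
        },
    }


# Hypothetical workload name and image.
manifest = gpu_pod_manifest("llm-inference", "example.com/llm-inference:latest")
print(json.dumps(manifest, indent=2))
```

The printed JSON can be saved to a file and applied like any other manifest. On clusters that expose MIG slices as separate resources, a different resource name would be passed as `gpu_resource`.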

Convergence of Storage: Providing High Performance and Reliability for Data Processing and Analysis in AI Applications

AI applications rely on various types of data and involve stages such as data collection, cleaning, categorization, inference, output, and archiving. Each stage reads or writes data, so the storage system must sustain high-speed access throughout the workflow.

With proprietary distributed storage, AECP can provide excellent performance and stability for AI applications through technical features such as I/O locality, storage tiering, Boost mode, RDMA support, and resident cache.

AECP also supports a wide range of storage hardware, from cost-effective mechanical hard disks to high-performance NVMe devices. For instance, cold or warm data can be stored on low-cost magnetic disks. When a surplus of cold data remains after AI tasks complete, users can move it to a more budget-friendly storage system through replication or backup, balancing system performance against overall cost.
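The placement idea described above can be sketched as a simple age-based policy. This is not AECP's tiering algorithm: the thresholds, the tier names, and the `choose_tier` function are assumptions chosen only to illustrate mapping hot data to NVMe, warm or cold data to magnetic disks, and surplus cold data to a cheaper external system.

```python
from datetime import datetime, timedelta

# Assumed thresholds, for illustration only.
HOT_WINDOW = timedelta(days=1)
WARM_WINDOW = timedelta(days=30)


def choose_tier(last_access, now):
    """Pick a storage tier from how recently the data was last accessed."""
    age = now - last_access
    if age <= HOT_WINDOW:
        return "nvme"      # hot data stays on high-performance devices
    if age <= WARM_WINDOW:
        return "hdd"       # warm or cold data moves to low-cost magnetic disks
    return "archive"       # surplus cold data goes to a cheaper backup system


now = datetime(2025, 1, 31)
datasets = {
    "inference-cache": datetime(2025, 1, 30, 12, 0),
    "fine-tune-corpus": datetime(2025, 1, 10),
    "raw-logs-2024": datetime(2024, 10, 1),
}
for name, last_access in datasets.items():
    print(f"{name}: {choose_tier(last_access, now)}")
```

A production policy would likely weigh access frequency and capacity pressure as well as age. The single recency threshold here only keeps the example short.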

Furthermore, AECP offers flexible scalability, enabling users to scale capacity in line with the demands of AI applications, resulting in linear performance growth as capacity increases. Enterprises can also utilize AECP to support AI applications deployed at edge sites or factories, ensuring that data processing and computing tasks occur at the same site and node. This approach effectively minimizes latency stemming from cross-node communication.

Key Advantages of Arcfra AI Solution

High-Performance, Reliable Storage

By integrating the hypervisor with distributed storage, AECP minimizes latency and optimizes data transmission across sites of any scale. Its enterprise-grade storage ensures secure, high-speed processing of structured, semi-structured, and unstructured data, giving AI applications fast, reliable access to critical datasets.

Scalable, Open Architecture

AECP’s software-defined infrastructure allows for seamless scalability, adapting to the growing demands of AI workloads. Hardware-agnostic and highly flexible, it supports diverse server configurations and efficient scheduling of high-performance CPUs and GPUs — ensuring optimal resource allocation for AI-driven tasks.

Unified Support for VMs & Containers

AECP enables efficient deployment, management, and scaling of both virtualized and containerized AI workloads. By providing a consistent, integrated runtime environment, it ensures seamless workload orchestration across different infrastructures and locations.

Simplified & Intelligent O&M

With centralized management of compute and storage resources, AECP streamlines infrastructure operations across multiple sites. Its unified O&M framework enhances efficiency, automation, and visibility, reducing complexity while optimizing resource utilization.

For more information about AECP, please visit https://www.arcfra.com/ and https://docs.arcfra.com/.

Arcfra simplifies enterprise cloud infrastructure with a full-stack, software-defined platform built for the AI era. We deliver computing, storage, networking, security, Kubernetes, and more — all in one streamlined solution. Supporting VMs, containers, and AI workloads, Arcfra offers future-proof infrastructure trusted by enterprises across e-commerce, finance, and manufacturing. Arcfra is recognized by Gartner as a Representative Vendor in full-stack hyperconverged infrastructure. Learn more at www.arcfra.com.